Slurm Quick Start


Revision as of 10:24, 29 November 2022

This page explains how to configure Slurm and run a batch job on the Slurm cluster from the head node.

1. Configuring SLURM

A Slurm cluster has two kinds of nodes: a controller node, which runs the slurmctld daemon, and multiple compute nodes, which run the slurmd daemon and execute jobs in parallel.

To start the Slurm controller, run the following command as root on the controller (head) node:

$ systemctl start slurmctld.service

To verify that the controller is running, view the end of its log file (the log location is set by the SlurmctldLogFile parameter in slurm.conf):

$ tail /var/log/slurmctld.log

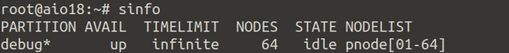

The nodes should be configured to run with the controller. You can see the information about available nodes using this command:

$ sinfo

2. Running a Slurm Batch Job on Multiple Nodes

Python scripts can be run in parallel on the cluster nodes. In this simple example, each task prints its own task number.

Create a Python Script

First, create a Python script that prints the task number passed to it as its first command-line argument:

#!/usr/bin/env python3
# Import sys to read the command-line arguments
import sys

# Print the task number passed as the first command-line argument
print('Hello! I am task number:', sys.argv[1])
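To run the script on several nodes at once, it is submitted as a batch job. The sketch below writes a batch script with a heredoc. It rests on these assumptions:

- The Python script above is saved as hello.py in a directory every compute node can read (for example, a shared home directory).
- python3 is installed on the nodes.
- The file names, the job name and the layout of 2 nodes with 2 tasks each are illustrative choices, not requirements.

Inside the job, `srun` starts one copy of the command per task and sets `SLURM_PROCID` (the task's rank, counting from 0) in each task's environment. The variable is therefore quoted so that each task expands its own value, rather than the batch shell expanding it once.

```shell
# Write the batch script (hello_job.sh and hello.py are assumed file names)
cat > hello_job.sh <<'EOF'
#!/bin/bash
# Job name shown by squeue
#SBATCH --job-name=hello
# Use 2 nodes with 2 tasks on each (4 tasks in total)
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=2
# Write stdout to hello_<jobid>.out (%j is replaced by the job ID)
#SBATCH --output=hello_%j.out

# Run one copy of the script per task. The single quotes stop the batch
# shell from expanding $SLURM_PROCID; each task's bash expands its own.
srun bash -c 'python3 hello.py "$SLURM_PROCID"'
EOF
```

Submit the job from the head node with `sbatch hello_job.sh`. While it is pending or running, it is listed by `squeue`. When it finishes, hello_<jobid>.out should contain one greeting line per task, for task numbers 0 to 3. The lines can appear in any order because the tasks run concurrently.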