Configuring IPython for Parallel Computing

Step 1. From a terminal, run the following command to create a parallel profile:

$ ipython3 profile create --parallel --profile=myprofile

If this completes successfully, the folder <NetID>/.ipython/profile_myprofile should contain config files such as ipcontroller_config.py, ipengine_config.py and ipcluster_config.py. Check this folder; if these config files are missing, there may be a problem with the IPython installation on the system.
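To do the same check from Python instead of listing the folder, a small sketch like the following reports which expected files are missing. The helper name `missing_config_files` is hypothetical, and the `__main__` part assumes the profile lives in the default `.ipython` directory under your home directory.

```python
from pathlib import Path

# Config files that `ipython3 profile create --parallel` is expected to generate.
EXPECTED_FILES = ("ipcontroller_config.py", "ipengine_config.py", "ipcluster_config.py")


def missing_config_files(profile_dir, expected=EXPECTED_FILES):
    """Return the expected config file names that are not present in profile_dir."""
    profile_dir = Path(profile_dir)
    return [name for name in expected if not (profile_dir / name).is_file()]


if __name__ == "__main__":
    # Assumption: the default IPython directory (~/.ipython) is in use.
    profile = Path.home() / ".ipython" / "profile_myprofile"
    missing = missing_config_files(profile)
    if missing:
        print("Missing config files:", ", ".join(missing))
    else:
        print("All expected config files found in", profile)
```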

Step 2. Modify ipcluster_config.py so that the engines are launched on remote machines and the controller is launched on the local machine.

$ cd <NetID>/.ipython/profile_myprofile

$ vi ipcluster_config.py

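Make the following changes in this file (uncomment each line and edit it as shown; the line numbers mark where each setting appeared in the author's copy of the file and may differ in other ipyparallel versions):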

c.Cluster.engine_launcher_class = 'ssh'  # around line 528
c.SSHControllerLauncher.controller_args = ['--ip=*']  # around line 1689
c.SSHEngineSetLauncher.engines = {'pnode10': 2, 'pnode11': 2}  # around line 2423

Save the file and exit. In the c.SSHEngineSetLauncher.engines dictionary, list the machines (ramsey, fibonacci, etc.) or nodes (pnode01, pnode02, etc.) you want to use, each mapped to the number of engines to start on it. In this example, two engines each are started on pnode10 and pnode11.
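Because ipcluster_config.py is ordinary Python, the engines dictionary does not have to be typed out by hand when many nodes are involved. A minimal sketch, using a hypothetical helper `engines_for` and assuming the nodes follow the two-digit `pnodeNN` naming used above:

```python
def engines_for(node_numbers, engines_per_node, prefix="pnode"):
    """Build a {hostname: engine_count} dict for SSHEngineSetLauncher.engines.

    Hypothetical helper: assumes host names are `prefix` followed by a
    zero-padded two-digit node number (e.g. pnode10).
    """
    return {f"{prefix}{n:02d}": engines_per_node for n in node_numbers}


# Same as {'pnode10': 2, 'pnode11': 2}; the total engine count is the
# sum of the per-host counts.
engines = engines_for(range(10, 12), 2)
total_engines = sum(engines.values())
```

Inside ipcluster_config.py you could define the helper and then write `c.SSHEngineSetLauncher.engines = engines_for(range(10, 12), 2)`.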

Next, edit ipcontroller_config.py in the same folder:

c.IPController.ip = '0.0.0.0'

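Save and exit the file. This step is important: by default the controller typically listens only on the loopback interface (localhost), so engines on other machines cannot connect to it and no parallel computation will happen. Setting the IP to '0.0.0.0' makes the controller listen on all network interfaces.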
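If engines still fail to register after this change, one rough check is whether a TCP port on the controller host can be reached from an engine node at all (a firewall, for example, could block it). This is a generic sketch that is not part of ipyparallel; the helper name `port_is_reachable` is hypothetical, and the host and port you test are placeholders for your controller machine and a port it is actually listening on.

```python
import socket


def port_is_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unresolvable host names.
        return False
```

Run it on an engine node (for example pnode10) with the controller's host name and port; a False result points to a network or binding problem rather than an IPython one.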

Step 3. Parallel computing should now be set up. The following examples use IPython alone and IPython with MPI.

Example with IPython only

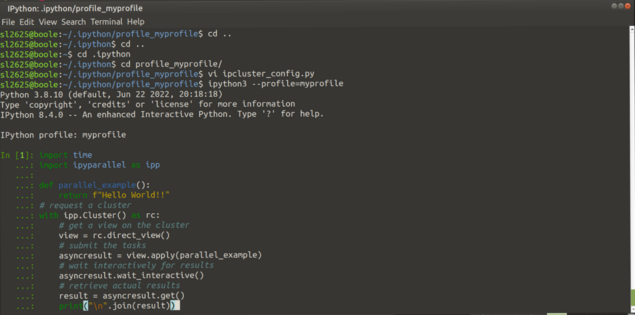

Launch IPython with the profile you created and edited:

$ ipython3 --profile=myprofile

Code:

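import ipyparallel as ipp

def parallel_example(i):
    # import inside the function so the import also runs on the remote engines
    import socket
    return f"Hello World!! (task {i} on {socket.gethostname()})"

# request a cluster using the profile configured above
with ipp.Cluster(profile="myprofile") as rc:
    # get a view on the cluster
    view = rc.load_balanced_view()
    # submit the tasks: map_async needs at least one sequence and runs one task per element
    asyncresult = view.map_async(parallel_example, range(4))
    # wait interactively for results
    asyncresult.wait_interactive()
    # retrieve actual results
    result = asyncresult.get()

print("\n".join(result))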

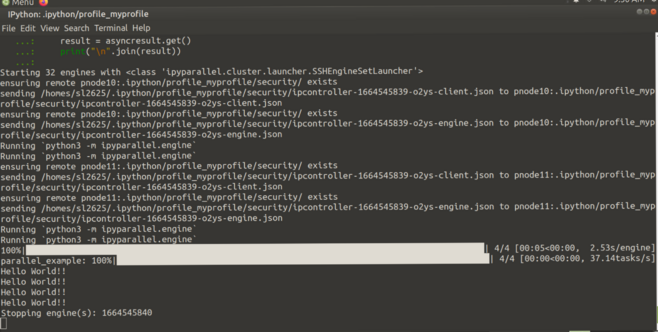

Executing this code gives:

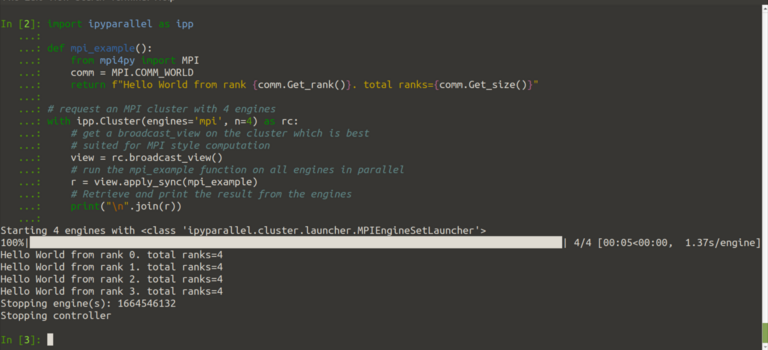
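Before sending a function to the engines, it can help to run it locally with the built-in map. view.map_async(f, seq) makes one call of f per element of seq, and with its default ordered=True the results come back in input order, so a local run shows the shape of the list you will get back. The sketch below uses a version of parallel_example that takes the task index as its argument; the hostname in the message is only there to show which machine ran each task.

```python
def parallel_example(i):
    # Import inside the function so the import also happens on a remote engine.
    import socket
    return f"Hello World!! (task {i} on {socket.gethostname()})"


# Local dry run: one call per element, results in input order, mirroring
# what view.map_async(parallel_example, range(4)) returns on the cluster.
local_results = list(map(parallel_example, range(4)))
print("\n".join(local_results))
```

Locally every line reports the same hostname; on the cluster the lines should name the engine nodes instead.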

Example with MPI

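Code (taken from https://ipyparallel.readthedocs.io/en/latest/). Note that engines='mpi' selects ipyparallel's MPI engine launcher, which starts the engines with mpiexec and needs mpi4py, instead of the 'ssh' engine launcher set in Step 2: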

import ipyparallel as ipp

def mpi_example():
    from mpi4py import MPI
    comm = MPI.COMM_WORLD
    return f"Hello World from rank {comm.Get_rank()}. total ranks={comm.Get_size()}"

# request an MPI cluster with 4 engines
with ipp.Cluster(engines='mpi', n=4) as rc:
    # get a broadcast_view on the cluster which is best
    # suited for MPI style computation
    view = rc.broadcast_view()
    # run the mpi_example function on all engines in parallel
    r = view.apply_sync(mpi_example)
    # Retrieve and print the result from the engines
    print("\n".join(r))

Result: